Today's AI is built on an outdated model of biology.

Modern neuroscience has revealed that dendrites, and not neurons, are the core computational unit of biological brains. Perforated AI's artificial dendrites emulate the non-linear integration and processing capabilities of biological dendrites, allowing artificial neuron modules to perform complex computations.

By introducing artificial dendrites into existing neural networks, we can reduce error by 15 to 20% with the same neuron architectures on the same datasets. The added dendrite parameters are also significantly more efficient than simply adding more traditional neurons. As a result, by starting an experiment with a reduced model, compression of up to 90% can be achieved while maintaining or even improving accuracy.

Start Experimenting

Our GitHub repository provides the ability to add artificial dendrites to PyTorch neural networks. The supplemental Perforated Backpropagation™ library boosts performance to the next level by training dendrites with our proprietary approaches rather than standard gradient descent.

Much current research in this space shows the significant impact of applying modern neuroscience to modern AI in this way. However, most of that research either lacks an open-source implementation or provides code only for the exact architectures used in its own experiments. It also discusses training dendrites only with standard backpropagation, missing the improvements achievable with alternative dendritic learning rules.

With under an hour of coding, you can add dendrites to any existing PyTorch project and advance your own research by joining us on the cutting edge of ML theory.

Unlock AI 2.0 with Perforated Backpropagation™

Perforated AI's critical breakthroughs in artificial dendrites form the basis of our patented technologies.

Perforated Backpropagation™ leverages the functionality of biological dendrites by introducing a new kind of dendrite-enhanced artificial neuron. These neurons act as plug-and-play replacements for existing neurons and can be added to any PyTorch learning system. Adding dendrites this way lets each neuron keep the same role it had in the original network. By improving each neuron's performance in that original role, Perforated Backpropagation™ enables the network as a whole to achieve unprecedented results with under an hour of coding.

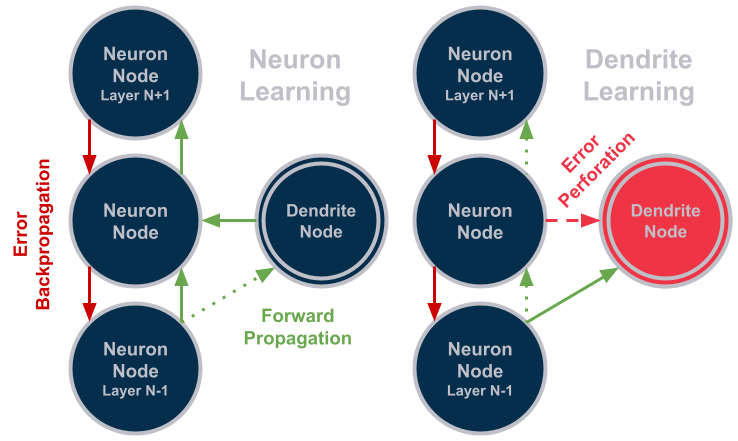

The new architectures keep the structure of the old ones: each neuron still passes data forward to other neurons and passes error backward to them. In addition, each neuron now receives a set of artificial dendrites that sit outside this system. These dendrites are completely removed from the neuron-to-neuron error signaling, yet they still support each neuron during the forward processing of data.
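To make the forward/backward split concrete, here is a minimal plain-Python sketch of a single neuron with dendrites. It is an illustration under stated assumptions, not the Perforated AI library API: the class name `DendriticNeuron`, the choice of tanh dendrites whose scaled outputs are added to the neuron's pre-activation, and the ReLU activation are all hypothetical. The key point it shows is that dendrites change the forward output, while the error sent back to upstream neurons flows only through the neuron's own weights.

```python
import math


def dot(a, b):
    """Dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


class DendriticNeuron:
    """Hypothetical sketch of a neuron augmented with dendrites.

    Assumptions (not the Perforated AI API): each dendrite is a tanh unit
    over the neuron's inputs, and its output, scaled by alpha, is added to
    the neuron's pre-activation.
    """

    def __init__(self, w, b, dendrites):
        self.w = w                  # neuron input weights (original role)
        self.b = b                  # neuron bias
        self.dendrites = dendrites  # list of (u, c, alpha): weights, bias, scale
        self._z = 0.0               # last pre-activation, cached by forward()

    def dendrite_outputs(self, x):
        return [math.tanh(dot(u, x) + c) for u, c, _ in self.dendrites]

    def forward(self, x):
        # Forward pass: dendrites contribute to the neuron's pre-activation.
        z = dot(self.w, x) + self.b
        z += sum(alpha * d for (_, _, alpha), d
                 in zip(self.dendrites, self.dendrite_outputs(x)))
        self._z = z
        return max(0.0, z)  # ReLU

    def backward_to_inputs(self, grad_out):
        # Error passed back to upstream neurons. Dendrite paths are treated
        # as constants (a stop-gradient), so they take no part in the
        # neuron-to-neuron error signal.
        grad_z = grad_out if self._z > 0 else 0.0
        return [grad_z * wi for wi in self.w]


neuron = DendriticNeuron([1.0, -0.5], 0.0, [([0.5, 0.5], 0.0, 1.0)])
plain = DendriticNeuron([1.0, -0.5], 0.0, [])
print(neuron.forward([1.0, 1.0]), plain.forward([1.0, 1.0]))
print(neuron.backward_to_inputs(1.0), plain.backward_to_inputs(1.0))
```

In this example the dendrite raises the neuron's output from 0.5 to 0.5 + tanh(1.0), while both neurons send the identical error `[1.0, -0.5]` upstream. Keeping the neuron's inputs and outputs unchanged is what allows a neuron like this to be dropped into an existing network in place of the original one.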

Our open-source and paid offerings are both downloadable libraries that can run entirely offline. Our offering is a tool for you to use, not a SaaS platform where you need to risk the privacy of your data or your code. We help you make your own networks better without introducing any additional security risks.

Because you work with your original systems, you retain the benefits of your previous research while taking existing models a powerful step further. This lets you achieve results today that might otherwise be years away without dendritic optimization.

The figure above shows one non-limiting implementation of how dendrites can plug into traditional neural networks with Perforated Backpropagation™. "Neuron Learning" shows how neurons can train after dendrites have been added. "Dendrite Training" shows how dendrites receive a learning signal perforated from the error a neuron has calculated. Our patent-protected algorithm enables dendrite modules to learn from that signal using any alternative ML method. In the open-source option, nodes exist in the same locations, but with typical connectivity and standard backpropagation for the forward and backward steps. This means the new dendrites follow the same learning rules as ordinary neurons, simply arranged in a dendrite-inspired architecture. That alone provides substantial benefits, while Perforated Backpropagation™ unlocks even more transformative results.
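As a rough illustration of the open-source option, the plain-Python sketch below trains a single dendrite with ordinary gradient descent on the neuron's error. It does not reproduce the proprietary Perforated Backpropagation™ learning rule. Several details are illustrative assumptions: the function name `train_dendrite`, the linear neuron, the tanh dendrite with a learned output scale `alpha`, and the schedule in which the neuron's weights stay frozen while only the dendrite learns.

```python
import math


def dot(a, b):
    """Dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def train_dendrite(data, w, b, u, c, alpha, lr=0.1, epochs=200):
    """Hypothetical sketch: fit one tanh dendrite by standard gradient descent.

    The neuron (weights w, bias b) is linear and frozen. Only the dendrite
    parameters (u, c, alpha) are updated, by gradient descent on half the
    mean squared error. Returns (u, c, alpha, final mean squared error).
    """
    u = list(u)
    n = len(data)
    for _ in range(epochs):
        gu = [0.0] * len(u)
        gc = 0.0
        ga = 0.0
        for x, y in data:
            d = math.tanh(dot(u, x) + c)
            err = dot(w, x) + b + alpha * d - y  # the neuron's error signal
            dd = err * alpha * (1.0 - d * d)     # chain rule through tanh
            gu = [g + dd * xi for g, xi in zip(gu, x)]
            gc += dd
            ga += err * d
        u = [ui - lr * g / n for ui, g in zip(u, gu)]
        c -= lr * gc / n
        alpha -= lr * ga / n
    mse = sum((dot(w, x) + b + alpha * math.tanh(dot(u, x) + c) - y) ** 2
              for x, y in data) / n
    return u, c, alpha, mse


# Toy task: a linear neuron cannot fit the tanh part of the target alone.
data = [([x], x + math.tanh(x)) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
_, _, _, before = train_dendrite(data, [1.0], 0.0, [0.1], 0.0, 0.1, epochs=0)
_, _, _, after = train_dendrite(data, [1.0], 0.0, [0.1], 0.0, 0.1)
print(before, after)
```

The frozen-neuron schedule is chosen to mirror the split between "Neuron Learning" and "Dendrite Training" in the figure. In the actual open-source option, dendrites are trained with standard backpropagation inside a full PyTorch network.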