This testimonial comes from a team working with an AI model called MobileNet, which was originally developed by Google. MobileNet is a compute-optimized architecture built to run computer vision applications on the restricted hardware of edge devices. Over the course of our hackathon, the team was able to optimize the model for both accuracy and compression with Perforated Backpropagation™.

The Team

This project was a collaboration between Rushi, a Data Engineer at Deloitte, and Rowan, an undergraduate student at the University of Pittsburgh. With a shared interest in efficient deep learning, they set out to explore how compact neural networks can be enhanced through advanced fine-tuning techniques without relying on heavyweight architectures.

Their project used MobileNet, a streamlined convolutional neural network designed by Google for speed and resource efficiency. Working with the CIFAR-10 dataset of 60,000 images across 10 classes, they wanted to see how far they could push classification performance using minimal compute.

The Results

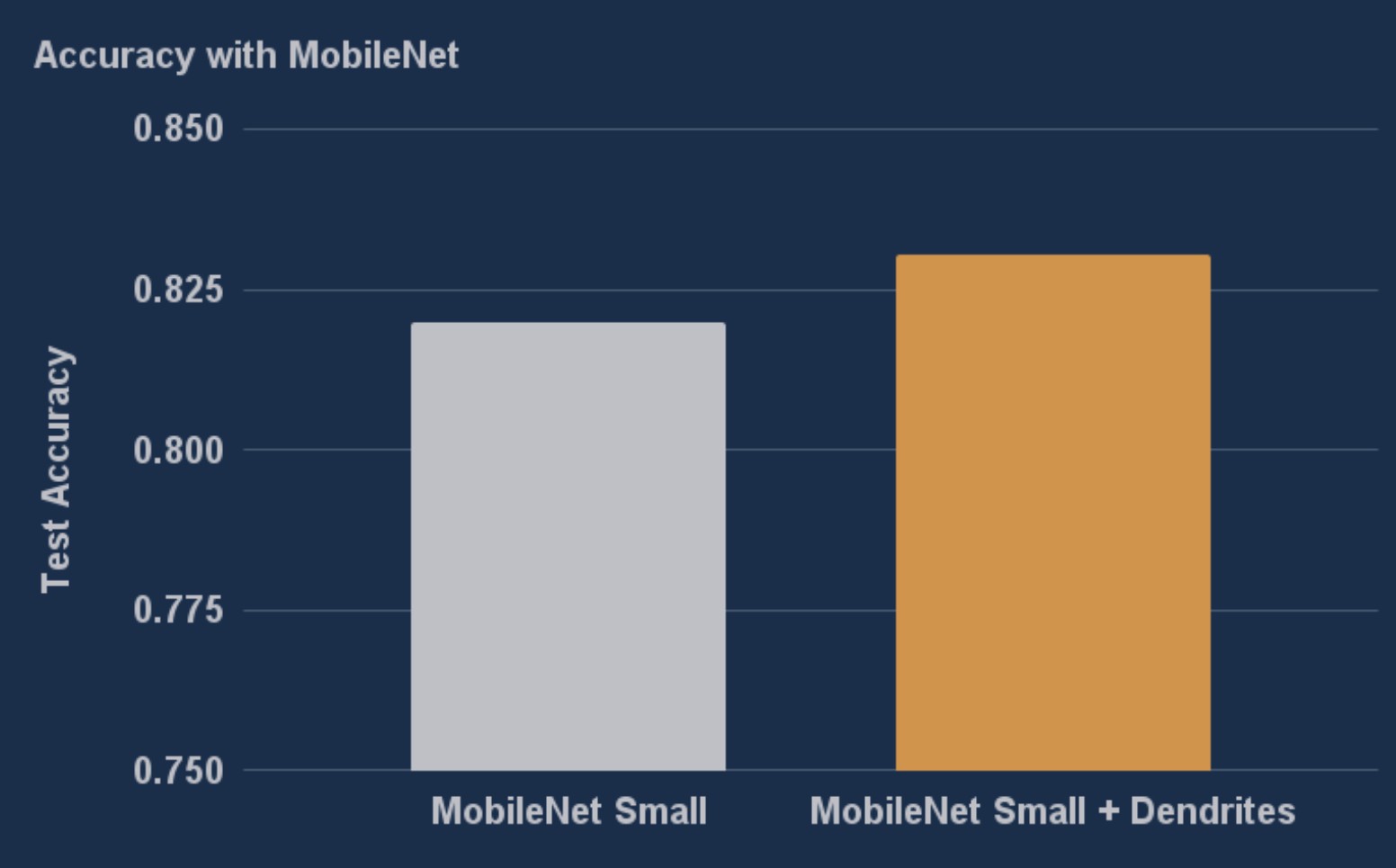

The real game changer was Perforated AI's dendrite-based fine-tuning, which allowed them to optimize models far beyond their expected performance limits. On the MobileNet-Small model, they saw a clear gain of 6% in relative terms, highlighting how effective Perforated AI's fine-tuning can be, even on models that are already highly optimized.

Adding Perforated Backpropagation™ reduces error by 6%
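A relative reduction in error is not the same as a gain in accuracy points, so it can help to see how such a figure is typically computed. The following is a minimal sketch, assuming the 6% is a relative reduction in error rate; the function name and the accuracy values are hypothetical illustrations, not the team's measured results.

```python
def relative_error_reduction(baseline_acc: float, improved_acc: float) -> float:
    """Return the fraction by which the error rate shrinks.

    Both arguments are accuracies expressed as fractions in [0, 1].
    """
    baseline_err = 1.0 - baseline_acc
    improved_err = 1.0 - improved_acc
    if baseline_err <= 0:
        raise ValueError("baseline accuracy must be below 1.0")
    return (baseline_err - improved_err) / baseline_err


# Hypothetical accuracies chosen only to illustrate the formula;
# they are NOT the values measured in this project.
example = relative_error_reduction(baseline_acc=0.50, improved_acc=0.53)
print(f"Relative error reduction: {example:.1%}")
```

Under this reading, the same relative figure corresponds to fewer absolute accuracy points as the baseline gets stronger, which is why relative error reduction is a common way to report gains on models that are already well tuned.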

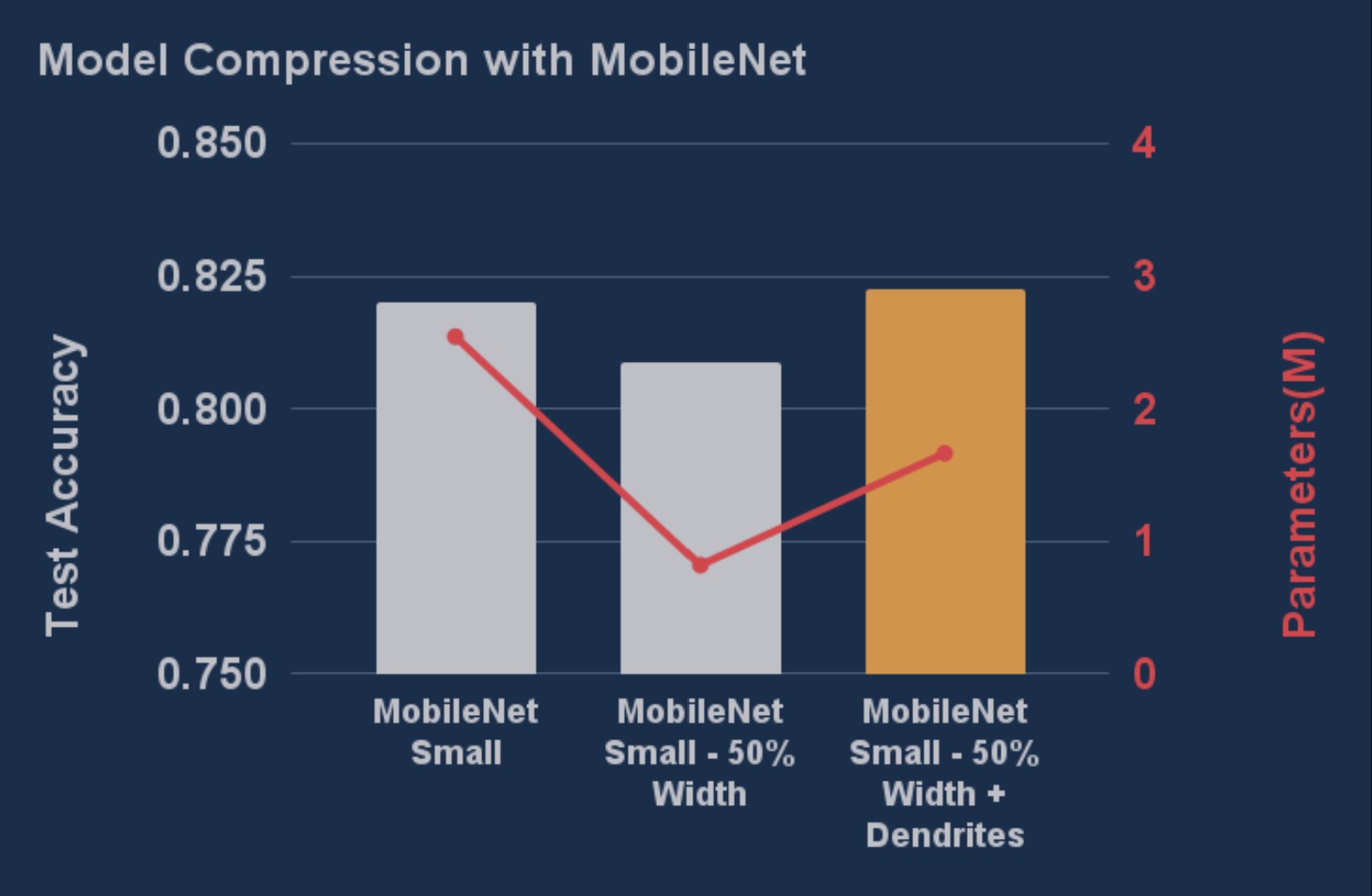

After seeing these accuracy gains, the team selected parameters to create a MobileNet-Extra-Small variant. Using this new architecture in combination with Perforated Backpropagation™, they brought the parameter count down from 2.54 million to just 1.66 million and still outperformed the original model, pushing the accuracy to 82.25%.
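The compression figure can be checked directly from the two parameter counts quoted above. This is a small sketch of that arithmetic; the function and constant names are illustrative assumptions, and only the two counts come from the project.

```python
def parameter_reduction(original: float, reduced: float) -> float:
    """Return the fraction of parameters removed relative to the original model."""
    if original <= 0:
        raise ValueError("original parameter count must be positive")
    return (original - reduced) / original


# Parameter counts as reported by the team (in individual parameters).
ORIGINAL_PARAMS = 2.54e6
REDUCED_PARAMS = 1.66e6

reduction = parameter_reduction(ORIGINAL_PARAMS, REDUCED_PARAMS)
print(f"Parameters removed: {reduction:.1%}")  # prints "Parameters removed: 34.6%"
```

Rounded to the nearest whole percent, this gives the 35% reduction quoted for the smaller model. In a PyTorch project, counts like these are commonly obtained with `sum(p.numel() for p in model.parameters())`.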

Implementation Experience

The technology was quick and easy to implement as an extension of the base PyTorch training pipeline. After optimization, scaling up required only minimal code changes, lowering the barrier to adoption and allowing integration without system overhauls.

Adding Perforated Backpropagation™ yields improved accuracy with a model that has 35% fewer parameters.

What the Team Said

Seeing a model that small perform that well was honestly surprising. It really changes the way we think about deploying AI on limited hardware.

- Rowan Morse

For anyone building on a budget, or targeting mobile and embedded devices, this is a direction worth exploring.

- Rushi Chaudhari
