This case study comes from a client research team that improved the predictive power of a BERT variant adapted to work with amino acid sequences rather than natural language. The client matched the predictive power of the original model with 21% of the original parameters.

About the Research Team

Jingyao Chen, Xirui Liu, and Zoey You are computational biology experts. Large models such as BERT offer substantial potential, but the team recognized that for certain complex functions a simpler model could be beneficial. With the assistance of Dendrite technology, the team developed a more computation-friendly approach for models that require fine-tuning.

ProteinBert, which employs projection techniques to construct a more compact architecture, was selected as the base protein BERT model. It is trained on protein sequences and has a smaller vocabulary (20 amino acids plus special tokens) but a longer context window to handle long protein sequences.
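To make the small vocabulary concrete, here is a minimal sketch of a per-residue tokenizer. The special-token names, the id assignments, and the `max_length` of 512 are assumptions chosen for illustration; they are not ProteinBert's actual vocabulary or context length.

```python
# Hypothetical amino-acid tokenizer. Token names, ids, and max_length are
# illustrative assumptions, not ProteinBert's real vocabulary.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues
SPECIAL_TOKENS = ["[PAD]", "[UNK]", "[CLS]", "[SEP]", "[MASK]"]

# Special tokens take ids 0-4, residues take ids 5-24.
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
VOCAB.update({aa: i + len(SPECIAL_TOKENS) for i, aa in enumerate(AMINO_ACIDS)})


def encode(sequence, max_length=512):
    """Map a protein sequence to a fixed-length list of token ids."""
    # One token per residue; anything outside the 20 residues becomes [UNK].
    body = [VOCAB.get(aa, VOCAB["[UNK]"]) for aa in sequence.upper()]
    body = body[: max_length - 2]  # leave room for [CLS] and [SEP]
    ids = [VOCAB["[CLS]"]] + body + [VOCAB["[SEP]"]]
    ids += [VOCAB["[PAD]"]] * (max_length - len(ids))  # right-pad
    return ids
```

With only 25 entries, the embedding table is tiny compared with a natural-language BERT, so most of the parameter budget sits in the encoder layers rather than in the vocabulary.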

The Results

Through this optimization, the model was reduced in depth from 30 layers to 12, and its hidden layer width was decreased from 1024 to 480. The client team used the same dataset as the original AMP-BERT: 1,778 antimicrobial peptides and 1,778 non-antimicrobial peptides, with each sequence labeled as AMP (1) or non-AMP (0).
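The labeling scheme described above can be sketched as follows. The helper names, the 20% test fraction, and the fixed seed are assumptions for illustration; the case study does not state how the team split the data.

```python
import random


def build_dataset(amp_seqs, non_amp_seqs):
    """Label AMP sequences 1 and non-AMP sequences 0."""
    return [(s, 1) for s in amp_seqs] + [(s, 0) for s in non_amp_seqs]


def stratified_split(examples, test_fraction=0.2, seed=0):
    """Split per class so train and test keep the same class balance.

    test_fraction and seed are illustrative assumptions.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label in (0, 1):
        group = [ex for ex in examples if ex[1] == label]
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    # Shuffle again so the classes are interleaved within each split.
    rng.shuffle(train)
    rng.shuffle(test)
    return train, test
```

Splitting each class separately keeps the 1:1 balance of the full dataset in both partitions, which keeps a metric such as F1 comparable between them.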

Their goal was to train a binary classifier that predicts whether a given protein sequence is antimicrobial. Using the streamlined model, they conducted limited pre-training on the UniRef50 dataset before fine-tuning on the AMP dataset.

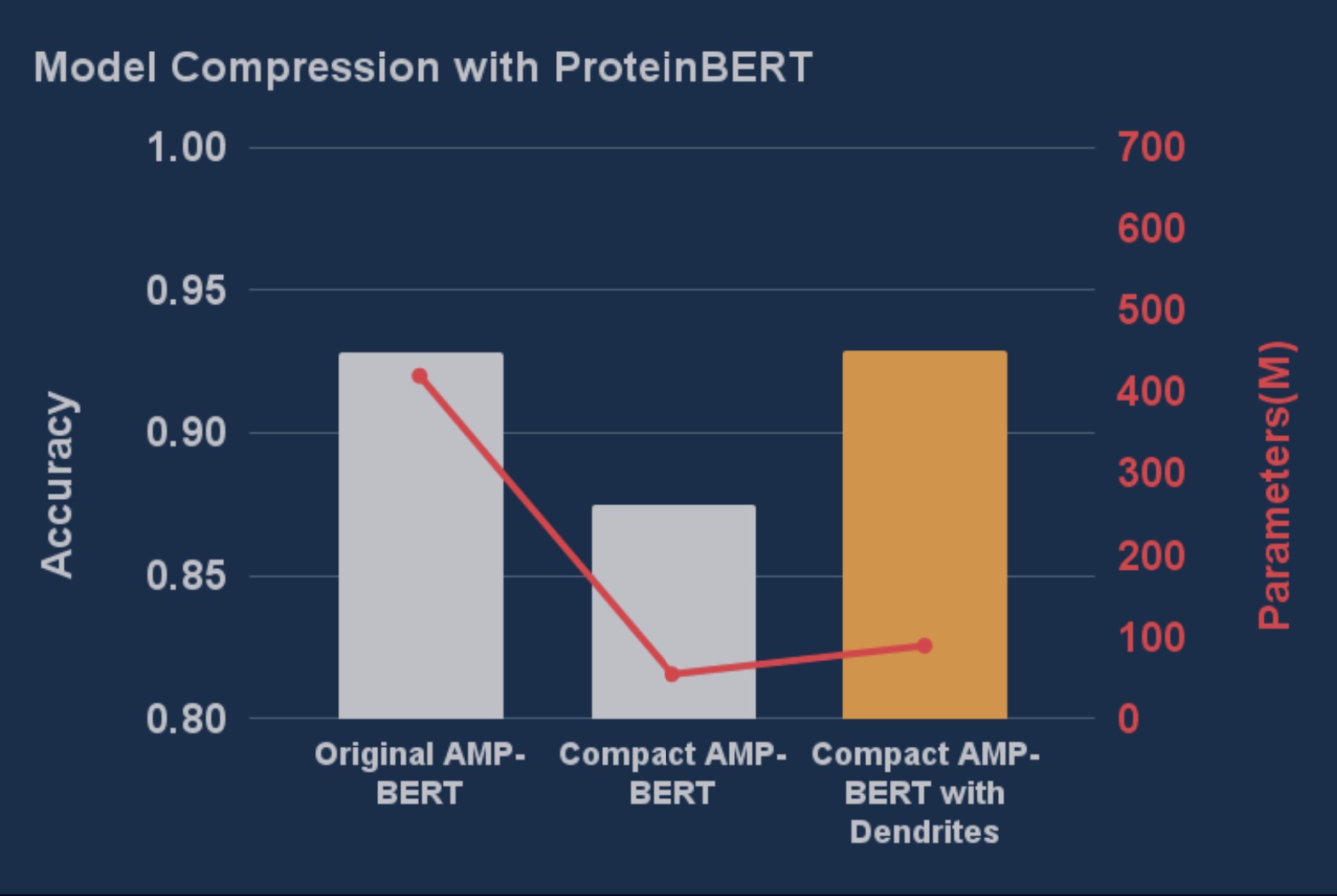

Adding Perforated Backpropagation (PB) allows equal accuracy with 21% of the parameters

Implementation Experience

The client team's model achieved identical F1 scores on the test set while utilizing only 21.2% of the original parameters. This dramatic reduction in model size while maintaining performance represents a significant breakthrough in computational biology applications.
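For readers who want to reproduce this kind of comparison, the two quantities involved can be computed as in the sketch below. The function names are hypothetical, and this is the standard binary F1 definition rather than the team's own evaluation code.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def parameter_fraction(compact_params, original_params):
    """Share of the original parameter count used by the compact model."""
    return compact_params / original_params
```

For example, `f1_score([1, 1, 0, 0], [1, 0, 0, 1])` gives 0.5, since precision and recall are both 0.5. A `parameter_fraction` of 0.212 corresponds to the 21.2% figure reported here.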

What Jingyao Said

Fine-tuning updates only a small fraction of a large model's parameters, and Perforated AI's dendrite mechanism made me immediately recognize its strong connection to efficient fine-tuning. I decided to give it a try, and even with a dramatically reduced model size, the system helped us maintain performance. This kind of result was unimaginable with traditional methods!

- Jingyao Chen

Ready to Optimize Your Models?

See how Perforated Backpropagation™ can improve your neural networks
