The Challenge

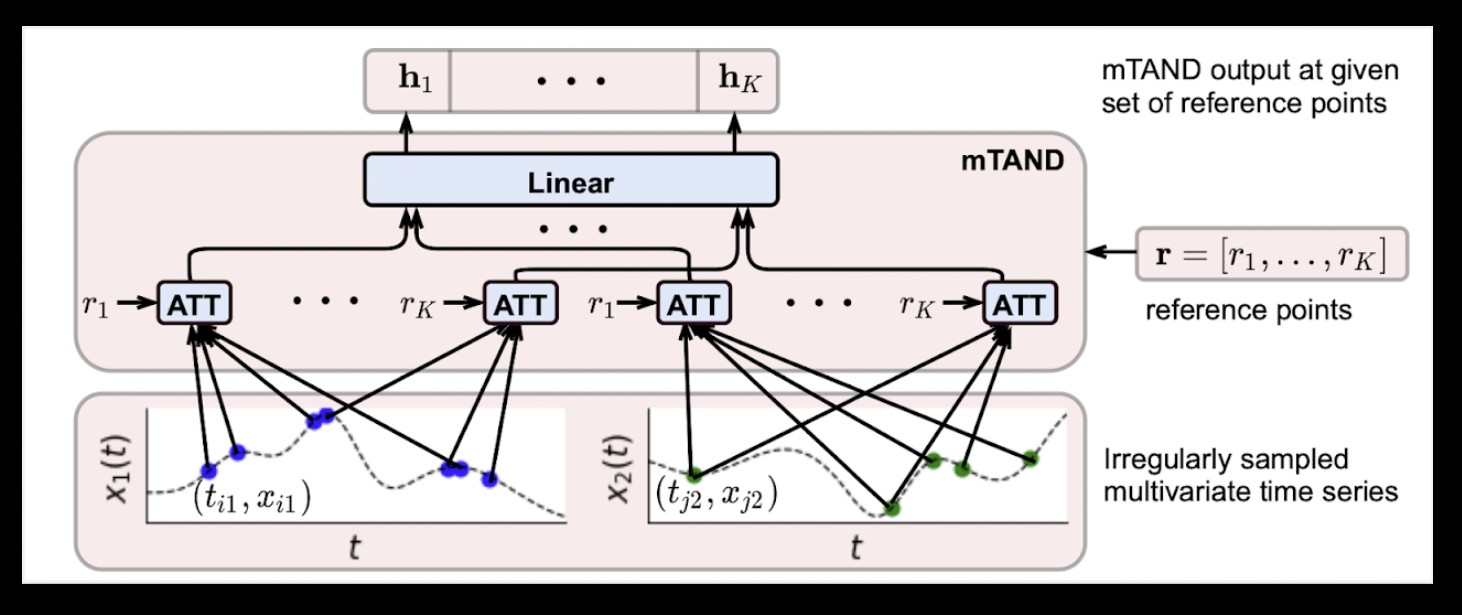

The PhysioNet dataset presents unique challenges for ML systems that predict patient outcomes in intensive care units. With only 4,000 data points and up to 42 variables, including six general descriptors and multiple irregularly sampled time series, the dataset requires models that can handle sparse, irregular data efficiently. We used mTAND (Multi-Time Attention Networks), the state-of-the-art open-source architecture on this benchmark at the time of testing.
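To make "sparse, irregular data" concrete, here is a minimal sketch of one common way to batch irregularly sampled readings: pad each patient's record to a shared length and carry an observation mask alongside the values and timestamps. The function name `pad_irregular_series` and the sample readings are hypothetical and for illustration only; this is not necessarily the preprocessing used in the experiments described here.

```python
from typing import List, Optional, Tuple

Observation = Tuple[float, Optional[float]]  # (hours since admission, value or None)


def pad_irregular_series(
    records: List[List[Observation]],
) -> Tuple[List[List[float]], List[List[float]], List[List[int]]]:
    """Pad per-patient observations to a common length.

    Returns (times, values, mask). A mask entry of 1 marks a real
    observation; 0 marks either padding or a missing value.
    """
    max_len = max((len(record) for record in records), default=0)
    times, values, mask = [], [], []
    for record in records:
        t_row, v_row, m_row = [], [], []
        for t, v in record:
            t_row.append(t)
            v_row.append(0.0 if v is None else v)  # placeholder, hidden by the mask
            m_row.append(0 if v is None else 1)
        pad = max_len - len(record)
        times.append(t_row + [0.0] * pad)
        values.append(v_row + [0.0] * pad)
        mask.append(m_row + [0] * pad)
    return times, values, mask


# Hypothetical readings: two patients sampled at different, uneven times.
patients = [
    [(0.5, 88.0), (2.0, 92.0), (7.25, None)],
    [(1.0, 71.0)],
]
times, values, mask = pad_irregular_series(patients)
```

The mask lets a downstream model ignore padded or missing entries instead of treating the placeholder zeros as real measurements.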

The Results

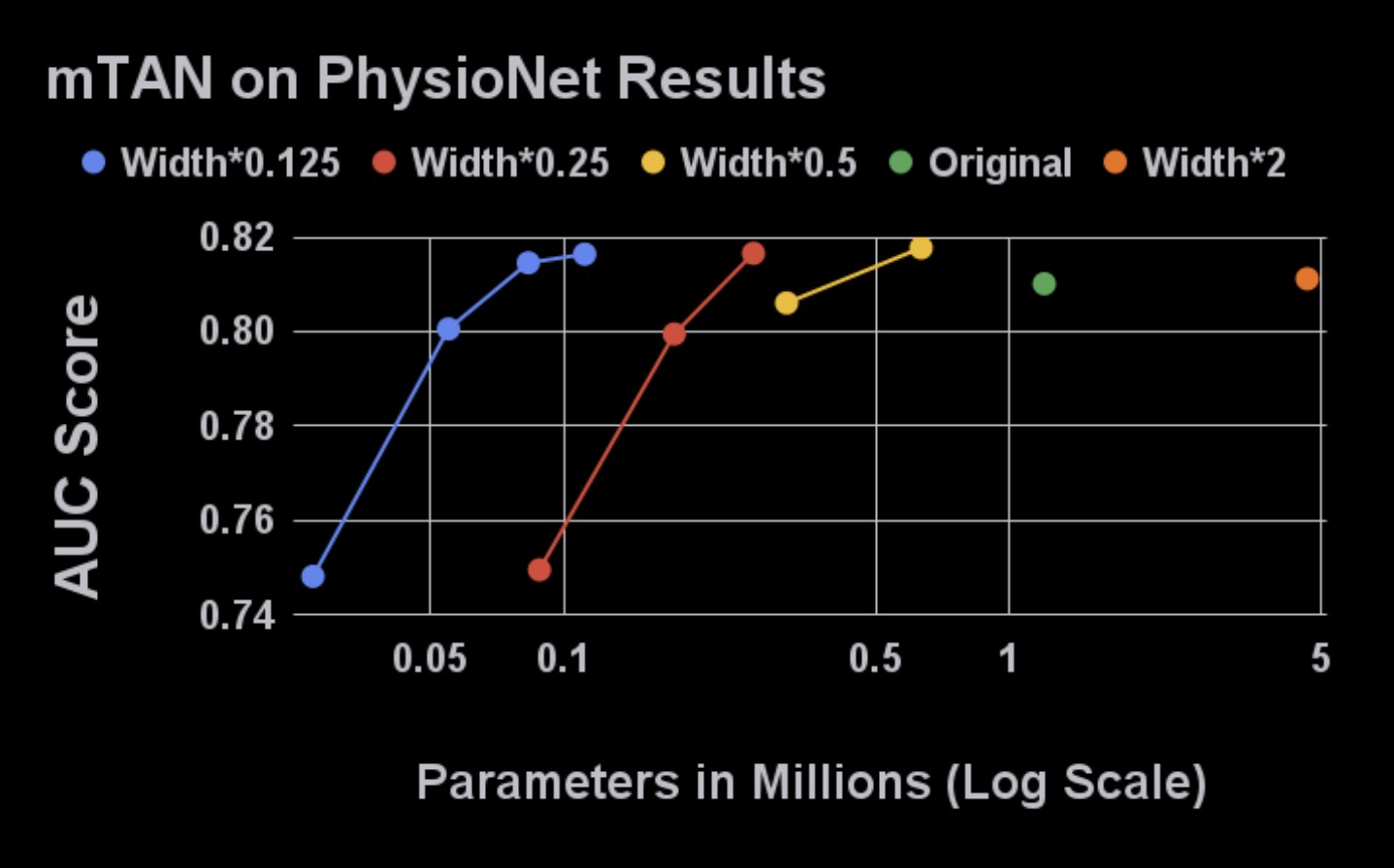

Initial integration of Perforated Backpropagation™ with the original mTAND architecture led to overfitting. However, by reducing the network width by a constant factor and then adding Perforated Backpropagation™, with no other modifications to depth or training parameters, we achieved remarkable efficiency gains. The final network was just 9.3% of the size of the original while improving Test AUC by 4%. Notably, simply doubling the original network width had minimal effect on accuracy, demonstrating that traditional scaling approaches were ineffective.
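As a rough illustration of the size arithmetic, the sketch below converts the reported 9.3% figure into a reduction factor and shows how scaling hidden widths by a constant factor shrinks the parameter count of a fully connected network. The layer widths and the 0.25 scaling factor are hypothetical, not the mTAND configuration, and the sketch does not model any change in parameter count from Perforated Backpropagation™ itself.

```python
def mlp_param_count(layer_widths):
    """Count weights and biases in a fully connected network."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_widths, layer_widths[1:])
    )


def scale_hidden(layer_widths, factor):
    """Scale hidden widths by `factor`; input and output widths stay fixed."""
    hidden = [max(1, round(w * factor)) for w in layer_widths[1:-1]]
    return [layer_widths[0], *hidden, layer_widths[-1]]


# Reported in the text: the final network is 9.3% of the original size.
reported_fraction = 0.093
reduction_factor = 1 / reported_fraction  # about 10.75x smaller

# Hypothetical widths, for illustration only (not the mTAND architecture).
baseline = [42, 128, 128, 1]
narrow = scale_hidden(baseline, 0.25)
ratio = mlp_param_count(narrow) / mlp_param_count(baseline)

print(f"reduction factor: {reduction_factor:.2f}x")
print(f"narrow/baseline parameter ratio: {ratio:.3f}")
```

Because hidden-to-hidden weight matrices grow with the product of adjacent widths, a constant width factor shrinks those layers roughly quadratically, which is why a modest width reduction can remove most of a network's parameters.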

Real-World Impact

These improvements are particularly significant for healthcare applications, where model efficiency directly impacts deployment feasibility. A model that is roughly 10x smaller while being more accurate can run on edge devices in clinical settings, enable faster predictions during critical moments, and reduce computational costs, all while maintaining the high performance standards required for patient care decisions.

Ready to Optimize Your Models?

See how Perforated Backpropagation™ can improve your neural networks.
